Today’s Contents

🚀 Mastering Prompt Frameworks Across AI Models

🧰 Tech Toolbox

🚀 Mastering Prompt Frameworks Across AI Models

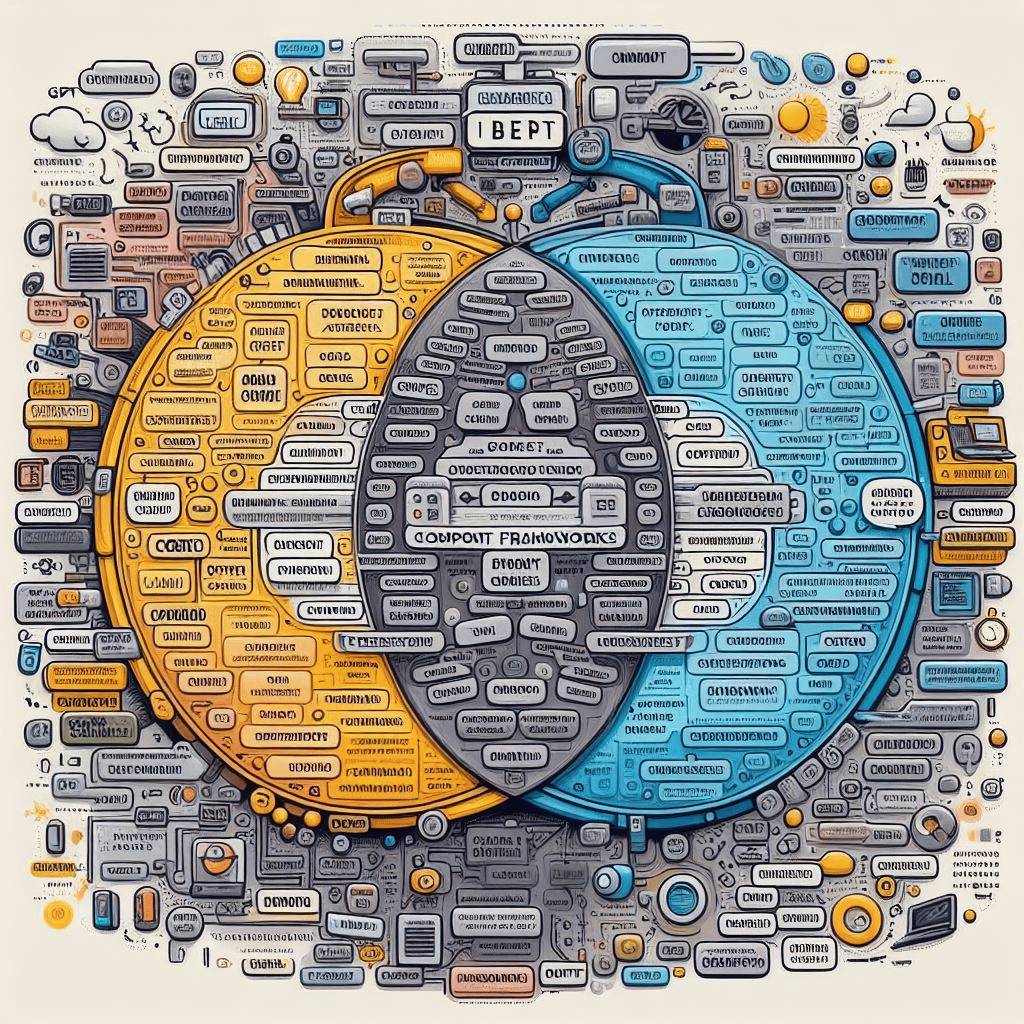

Prompt frameworks serve as the compass guiding our interactions with AI models. Whether you’re navigating ChatGPT, Gemini, Claude, or Microsoft Copilot, understanding these frameworks empowers you to unlock their full potential. Let’s explore them without further ado:

Prompt Design and Engineering: Introduction and Advanced Methods

Prompt design and engineering have rapidly become essential for maximizing the potential of large language models (LLMs). In this article, we’ll introduce core concepts, delve into advanced techniques, and explore the principles behind building LLM-based agents. Buckle up as we journey through the world of prompts!

1. What is a Prompt?

A prompt in generative AI models serves as the textual input provided by users to guide the model’s output. It can range from simple questions to detailed descriptions or specific tasks. For instance:

In image generation models like DALL·E 3, prompts are often descriptive.

In LLMs like GPT-4 or Gemini, prompts can vary from straightforward queries to complex problem statements.

Prompts typically consist of:

Instructions: How the model should answer the question.

Questions: The queries posed to the model.

Input data: Relevant information.

Examples: Illustrative samples.

To elicit a desired response, a prompt must contain either instructions or questions (other elements are optional). Basic prompts can be as simple as asking a direct question, while advanced prompts involve more complex structures, such as “chain of thought” prompting.
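To make the four elements concrete, here is a minimal Python sketch that assembles them into one prompt string. The `Prompt` class, its field names, and the section labels are assumptions made for illustration, not part of any model's API. The one rule it enforces comes from the text above: a prompt needs instructions or a question, and everything else is optional.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Hypothetical container for the four prompt elements."""

    instructions: str = ""
    question: str = ""
    input_data: str = ""
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        # A usable prompt needs at least instructions or a question.
        if not (self.instructions.strip() or self.question.strip()):
            raise ValueError("prompt needs instructions or a question")
        parts = []
        if self.instructions:
            parts.append(f"Instructions: {self.instructions}")
        if self.input_data:
            parts.append(f"Input data: {self.input_data}")
        # Number the optional examples so the model can tell them apart.
        for i, example in enumerate(self.examples, start=1):
            parts.append(f"Example {i}: {example}")
        if self.question:
            parts.append(f"Question: {self.question}")
        return "\n\n".join(parts)


if __name__ == "__main__":
    prompt = Prompt(
        instructions="Answer in one sentence.",
        question="What is a prompt?",
    )
    print(prompt.render())
```

The rendered string can then be pasted into a chat window or sent through whichever client library you use.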

2. Examples of Basic Prompts

Let’s explore some basic prompt examples using GPT-4 in ChatGPT:

Instructions + Question:

Prompt: “How should I write my college admission essay? Give me suggestions about the different sections I should include, what tone I should use, and what expressions I should avoid.”

Output: (Generated response based on the prompt)

Instructions + Input:

Prompt: “Given the following information about me, write a 4-paragraph college essay: I am originally from Barcelona, Spain. While my childhood had different traumatic events, such as the death of my father when I was only 6, I still think I had quite a happy childhood. During my childhood, I changed schools very often, and attended all kinds of schools, from public schools to very religious private ones.”

Output: (Generated essay based on the input data and instructions)

With the basics covered, here are four prompt frameworks you can apply in ChatGPT, Gemini, Claude, or Microsoft Copilot:

1. R-T-F: Role-Task-Format

Role (R): Define the persona the AI model assumes (e.g., copywriter, analyst).

Task (T): Specify the desired action (e.g., create an ad, summarize data).

Format (F): Determine the output style (e.g., headline, paragraph).

Example:

“ChatGPT, as a copywriter, craft a captivating headline for our new product.”

Benefits:

Intuitive.

Ideal for creative tasks.
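If you reuse R-T-F often, a small template function keeps the three parts explicit. The sketch below is one possible layout. The function name `rtf_prompt` and the exact sentence pattern are assumptions for illustration, so adjust the wording to whatever your model responds to best.

```python
def rtf_prompt(role: str, task: str, output_format: str) -> str:
    """Build a Role-Task-Format prompt (hypothetical helper)."""
    # Role sets the persona, task states the action,
    # and output_format fixes the output style.
    return f"Act as {role}. {task} Format: {output_format}."


if __name__ == "__main__":
    print(
        rtf_prompt(
            role="a copywriter",
            task="Craft a captivating headline for our new product.",
            output_format="a single headline",
        )
    )
```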

2. T-A-G: Task-Action-Goal

Task (T): Clearly state the objective.

Action (A): Describe the necessary steps.

Goal (G): Define the desired outcome.

Example:

“Gemini, summarize this research paper concisely and accurately.”

Benefits:

Precision.

Effective for problem-solving.
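T-A-G can be spelled out the same way, with the Action part written as numbered steps. This is a sketch under assumptions: `tag_prompt` and the "Task / Actions / Goal" labels are made up for illustration, and the steps in the usage example are one possible breakdown of the research paper request above.

```python
from __future__ import annotations


def tag_prompt(task: str, actions: list[str], goal: str) -> str:
    """Build a Task-Action-Goal prompt (hypothetical helper)."""
    # Number the steps so the model follows them in order.
    steps = "\n".join(f"{n}. {step}" for n, step in enumerate(actions, start=1))
    return f"Task: {task}\nActions:\n{steps}\nGoal: {goal}"


if __name__ == "__main__":
    print(
        tag_prompt(
            task="Summarize this research paper.",
            actions=[
                "Read the abstract and conclusion.",
                "List the key findings.",
            ],
            goal="A concise, accurate summary.",
        )
    )
```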

3. B-A-B: Before-After-Bridge

Before: Present the problem or context.

After: Offer the solution.

Bridge: Seamlessly connect the two.

Example:

“Claude, explain neural networks, provide an example, and tie it all together.”

Benefits:

Enhances readability.

Ideal for instructive prompts.

4. R-I-S-E: Rules-Input-Examples-Expectations

Rules: Specify criteria or guidelines.

Input: Describe the context or data.

Examples: Illustrate with relevant samples.

Expectations: Set clear output expectations.

Example:

“Microsoft Copilot, given the following Python code, reformat it so that it adheres to PEP 8.”

Benefits:

Precise communication.

Guides the model effectively.
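R-I-S-E has the most parts, so it benefits the most from a template. The sketch below shows one way to lay out the four sections, with the examples given as before/after pairs. The helper name `rise_prompt`, the section labels, and the sample rule and snippets are all assumptions chosen to match the PEP 8 example above.

```python
from __future__ import annotations


def rise_prompt(
    rules: list[str],
    input_text: str,
    examples: list[tuple[str, str]],
    expectations: str,
) -> str:
    """Build a Rules-Input-Examples-Expectations prompt (hypothetical helper)."""
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    # Each example is a (before, after) pair, shown in quotes via repr().
    example_lines = "\n".join(
        f"- {before!r} -> {after!r}" for before, after in examples
    )
    return (
        f"Rules:\n{rule_lines}\n\n"
        f"Input:\n{input_text}\n\n"
        f"Examples:\n{example_lines}\n\n"
        f"Expectations: {expectations}"
    )


if __name__ == "__main__":
    print(
        rise_prompt(
            rules=["Follow PEP 8."],
            input_text="x=1",
            examples=[("a=1", "a = 1")],
            expectations="Return only the reformatted code.",
        )
    )
```

Keeping the examples as pairs makes it easy to add or remove few-shot samples without touching the rest of the prompt.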

Remember, adapt these frameworks judiciously based on context, complexity, and model strengths. Now, let’s wield our prompts with purpose! 🚀🤖✨

Here are five AI tools that specialize in prompt frameworks and engineering:

Agenta

Summary: Agenta is an open-source platform for experimenting with, evaluating, and deploying large language models (LLMs). It allows developers to iterate on prompts, parameters, and strategies to achieve desired outcomes.

Website: Agenta on GitHub

PromptPerfect

Summary: PromptPerfect focuses on improving prompt quality to achieve consistent results from LLMs. Developers can deploy prompts to PromptPerfect’s server and access APIs for their own applications.

OpenAI API (Prompt Engineering)

Summary: OpenAI provides strategies and tactics for better results from large language models (like GPT-4). Techniques include clear instructions, persona adoption, reference text, and splitting complex tasks.

Website: OpenAI API - Prompt Engineering

TopAI.Tools Prompt Engineering Software

Summary: A curated list of AI tools for prompt engineering software. Compare them based on use cases, features, and pricing.

Website: TopAI.Tools - Prompt Engineering Software

Deepgram AI Apps for Prompt Engineering

Summary: Deepgram offers AI apps and software for prompt engineering. Explore their selection for effective prompt creation.

Remember to explore these tools based on your specific needs and use cases! 🚀🤖